Hey everyone,

Quick question: When was the last time you needed test data and either:

Copied production data (and felt guilty about it)

Manually created 50 rows in a spreadsheet (and wanted to quit)

Skipped testing altogether because it was too much work

There is a better way. Let me show you how to generate realistic test data in about 2 minutes using AI.

The Problem With Test Data

You know this pain:

Scenario 1: You are building a new dbt model that calculates customer lifetime value. You need data to test it, but production has PII and needs approvals. So you either wait 3 days or just... skip proper testing.

Scenario 2: You are validating a data quality check. You need edge cases: nulls, duplicates, outliers. Creating this manually is mind-numbing.

Scenario 3: You are onboarding a new analyst. They need realistic data to practice on, but you can't give them production access yet.

Sound familiar?

The Solution: AI-Generated Synthetic Data

Here's what changed my workflow: ChatGPT (with Code Interpreter/Advanced Data Analysis enabled) can generate realistic test datasets in seconds.

Not random garbage. Realistic data with proper distributions, correlations, and edge cases.

Let me show you exactly how.

Example 1: Basic Customer Data

What you need: Test a pipeline that processes customer signups.

The prompt:

Generate a CSV with 1,000 rows of customer data:

- customer_id: unique integers starting at 1000

- signup_date: dates between Jan 2023 and Dec 2024, more signups in recent months

- email: realistic fake emails

- monthly_spend: amounts between $10-$5000, most between $50-$500 (realistic distribution)

- churn_flag: 15% should be true

- Make monthly_spend correlate with churn (lower spend = higher churn probability)

What you get: A downloadable CSV in 10 seconds with realistic patterns.

Why it's useful: The correlation between spend and churn makes your tests actually meaningful. You are testing with data that behaves like real users.
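If you're curious what Code Interpreter is roughly doing behind the scenes, or you want something you can rerun yourself, here's a minimal Python sketch of the Example 1 prompt. It uses only the standard library. It is not ChatGPT's actual output. The log-normal spend curve, the max-of-two-uniforms trick that skews signups toward recent months, the name lists, and the noisy-score churn rule are my own assumptions, chosen to match the prompt's wording.

```python
import csv
import math
import random
from datetime import date, timedelta

# Hypothetical name pools for fake emails.
FIRST = ["alex", "sam", "jordan", "taylor", "morgan", "casey", "riley", "jamie"]
LAST = ["smith", "garcia", "chen", "patel", "kim", "novak", "okafor", "silva"]


def generate_customers(n=1000, churn_rate=0.15, seed=42):
    """Build n customer rows shaped like the Example 1 prompt."""
    rng = random.Random(seed)  # fixed seed so reruns give identical data
    start, end = date(2023, 1, 1), date(2024, 12, 31)
    span = (end - start).days
    rows = []
    for i in range(n):
        # max() of two uniform draws skews signups toward the end of the range.
        offset = round(max(rng.random(), rng.random()) * span)
        # Log-normal spend: median ~$150, long right tail, clipped to $10-$5000.
        spend = min(max(rng.lognormvariate(math.log(150), 0.9), 10.0), 5000.0)
        rows.append({
            "customer_id": 1000 + i,
            "signup_date": (start + timedelta(days=offset)).isoformat(),
            # example.com is reserved for documentation, so these never reach a real inbox.
            "email": f"{rng.choice(FIRST)}.{rng.choice(LAST)}{i}@example.com",
            "monthly_spend": round(spend, 2),
        })
    # Churn: score = log(spend) + random noise; the lowest-scoring 15% churn.
    # Low spenders are more likely to churn, but not deterministically.
    scores = [math.log(r["monthly_spend"]) + rng.gauss(0, 1) for r in rows]
    ranked = sorted(range(n), key=lambda j: scores[j])
    churned = set(ranked[: round(n * churn_rate)])
    for j, row in enumerate(rows):
        row["churn_flag"] = j in churned
    return rows


def write_csv(rows, path):
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0]))
        writer.writeheader()
        writer.writerows(rows)


if __name__ == "__main__":
    write_csv(generate_customers(), "customers.csv")
```

The churn rule is the interesting design choice. Ranking on log(spend) plus random noise yields exactly 15% churners while keeping the relationship probabilistic, so some high spenders still churn.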

Example 2: Event Data with Edge Cases

What you need: Test data quality checks on event logs.

The prompt:

Generate a CSV with 5,000 rows of event data:

- event_id: unique UUIDs

- user_id: 200 unique users, some users have many events, some have few (realistic distribution)

- event_timestamp: timestamps in December 2024, some clusters of activity

- event_type: 70% 'page_view', 20% 'click', 10% 'purchase'

- Include realistic data quality issues:

* 5% null user_ids

* 2% duplicate event_ids

* 3% future timestamps (data quality errors)

* Some events out of sequence for the same user

What you get: Test data that actually exercises your data quality rules.

Why it's useful: You are not just testing happy path. You are testing the messy reality of production data.
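Here's a sketch of the same idea for Example 2. It is again stdlib-only Python and not necessarily what ChatGPT writes. Several pieces are assumptions: the Zipf-style user weights, the five 2-hour activity bursts, and the 20 users that get out-of-sequence events. `AS_OF` is a hypothetical load date that defines what counts as a "future" timestamp. Each issue is injected into an exact number of rows, so your data quality checks have a known answer to hit.

```python
import csv
import random
import uuid
from datetime import datetime, timedelta

WINDOW_START = datetime(2024, 12, 1)
AS_OF = datetime(2025, 1, 1)  # hypothetical load time: anything after this is "future"


def generate_events(n=5000, n_users=200, seed=7):
    """December 2024 event rows with deliberately injected quality issues."""
    rng = random.Random(seed)
    users = [f"u{i:03d}" for i in range(n_users)]
    weights = [1 / (rank + 1) for rank in range(n_users)]  # few heavy users, long tail
    bursts = [WINDOW_START + timedelta(days=rng.uniform(0, 30)) for _ in range(5)]
    rows = []
    for _ in range(n):
        if rng.random() < 0.3:  # ~30% of events land inside a 2-hour burst
            ts = rng.choice(bursts) + timedelta(minutes=rng.uniform(0, 120))
        else:
            ts = WINDOW_START + timedelta(seconds=rng.uniform(0, 31 * 86400 - 1))
        rows.append({
            "event_id": str(uuid.UUID(int=rng.getrandbits(128), version=4)),  # seeded UUIDv4
            "user_id": rng.choices(users, weights=weights)[0],
            "event_timestamp": ts,
            "event_type": rng.choices(["page_view", "click", "purchase"],
                                      weights=[70, 20, 10])[0],
        })
    rows.sort(key=lambda r: r["event_timestamp"])  # the "clean" file is in time order

    # Out of sequence: for 20 users, swap the timestamps of two of their events.
    for user in rng.sample(users, 20):
        idx = [j for j, r in enumerate(rows) if r["user_id"] == user]
        if len(idx) >= 2:
            a, b = rng.sample(idx, 2)
            rows[a]["event_timestamp"], rows[b]["event_timestamp"] = (
                rows[b]["event_timestamp"], rows[a]["event_timestamp"])

    for j in rng.sample(range(n), round(n * 0.05)):  # 5% null user_ids
        rows[j]["user_id"] = None
    picked = rng.sample(range(n), 2 * round(n * 0.02))  # 2% duplicate event_ids
    for src, dst in zip(picked[::2], picked[1::2]):
        rows[dst]["event_id"] = rows[src]["event_id"]
    for j in rng.sample(range(n), round(n * 0.03)):  # 3% future timestamps
        rows[j]["event_timestamp"] = AS_OF + timedelta(days=rng.randint(1, 365))
    return rows


if __name__ == "__main__":
    events = generate_events()
    with open("events.csv", "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=list(events[0]))
        writer.writeheader()
        writer.writerows(events)
```

Because the counts are exact (250 null user_ids, 100 extra duplicate event_ids, 150 future timestamps), you can assert that your checks flag precisely those numbers.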

Example 3: Time Series with Seasonality

What you need: Test forecasting or anomaly detection models.

The prompt:

Generate daily revenue data for 2 years (2024-2025):

- date: every day

- revenue: baseline $10,000/day with:

* Weekly pattern (weekends 30% lower)

* Monthly pattern (end of month spike)

* Holiday spikes (Christmas, Black Friday)

* Random noise (±10%)

* One anomaly: a 50% drop for 3 days in June 2024

- Format as CSV

What you get: Realistic time series data with actual patterns to detect.

Why it's useful: Your anomaly detection needs realistic seasonality to test against, not just random numbers.
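Here's a minimal Python sketch of Example 3. It assumes specific numbers the prompt leaves open. The end-of-month spike is +25% on the last two days of each month. Black Friday is 2.5x baseline. Christmas shows up as a +60% run-up from Dec 18 to 24. The planted anomaly runs June 10 to 12, 2024. Each component is a multiplier on the $10,000 baseline, which makes it easy to switch one off and confirm your detector notices the difference.

```python
import csv
import random
from datetime import date, timedelta

BASELINE = 10_000.0


def black_friday(year):
    """Day after US Thanksgiving (the fourth Thursday of November)."""
    nov1 = date(year, 11, 1)
    first_thursday = nov1 + timedelta(days=(3 - nov1.weekday()) % 7)  # Monday=0, Thursday=3
    return first_thursday + timedelta(weeks=3, days=1)


def generate_revenue(start=date(2024, 1, 1), end=date(2025, 12, 31), seed=3):
    rng = random.Random(seed)
    fridays = {black_friday(y) for y in range(start.year, end.year + 1)}
    anomaly = {date(2024, 6, 10) + timedelta(days=k) for k in range(3)}  # assumed dates
    rows = []
    day = start
    while day <= end:
        value = BASELINE
        if day.weekday() >= 5:  # Saturday or Sunday: 30% lower
            value *= 0.70
        if (day + timedelta(days=2)).month != day.month:  # last two days of the month
            value *= 1.25
        if day in fridays:
            value *= 2.5
        elif day.month == 12 and 18 <= day.day <= 24:  # run-up to Christmas
            value *= 1.6
        value *= rng.uniform(0.9, 1.1)  # +/-10% noise
        if day in anomaly:
            value *= 0.5  # the planted 3-day, 50% drop
        rows.append({"date": day.isoformat(), "revenue": round(value, 2)})
        day += timedelta(days=1)
    return rows


if __name__ == "__main__":
    data = generate_revenue()
    with open("daily_revenue.csv", "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["date", "revenue"])
        writer.writeheader()
        writer.writerows(data)
```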

Example 4: Related Tables with Referential Integrity

What you need: Test a star schema with proper joins.

The prompt:

Generate two related CSV files:

1. customers.csv (500 rows):

- customer_id, signup_date, plan_type (free/pro/enterprise), region (US/EU/APAC)

2. orders.csv (5,000 rows):

- order_id, customer_id (must exist in customers table), order_date (after signup_date), order_amount (higher for enterprise customers), product_category

- Make sure order patterns make sense:

* Enterprise customers have larger, less frequent orders

* Free customers have small, occasional orders

* Order dates must be after customer signup dates

What you get: Two CSVs with referential integrity and realistic business logic.

Why it's useful: Your joins work, your business logic is testable, and you'll catch that bug where order_date comes before signup_date.
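Here's how the two related tables from Example 4 might be generated in Python. Several values are hypothetical ones I picked: the plan mix (60/30/10), the order-frequency weights, the amount ranges, the product categories, and the June 2025 cutoff for orders. The two guarantees from the prompt are built in by construction. Every order samples an existing customer, and every order_date is at least one day after that customer's signup_date.

```python
import csv
import random
from datetime import date, timedelta

# Assumed plan mix, relative order frequency, and order-amount range (USD) per plan.
PLANS = {
    "free": {"share": 60, "order_weight": 1, "amount": (5, 50)},
    "pro": {"share": 30, "order_weight": 4, "amount": (50, 500)},
    "enterprise": {"share": 10, "order_weight": 2, "amount": (1_000, 10_000)},
}
REGIONS = ["US", "EU", "APAC"]
CATEGORIES = ["software", "hardware", "services", "training"]  # hypothetical
SIGNUP_START, SIGNUP_END = date(2023, 1, 1), date(2024, 12, 31)
ORDER_END = date(2025, 6, 30)  # gives every customer at least ~6 months to order


def generate_star_schema(n_customers=500, n_orders=5000, seed=11):
    rng = random.Random(seed)
    plan_names = list(PLANS)
    customers = []
    for cid in range(1, n_customers + 1):
        signup = SIGNUP_START + timedelta(days=rng.randint(0, (SIGNUP_END - SIGNUP_START).days))
        customers.append({
            "customer_id": cid,
            "signup_date": signup,
            "plan_type": rng.choices(plan_names, weights=[PLANS[p]["share"] for p in plan_names])[0],
            "region": rng.choice(REGIONS),
        })
    # Each order picks an existing customer, so every customer_id is a valid foreign key.
    # Pro customers order most often; enterprise less often; free only occasionally.
    weights = [PLANS[c["plan_type"]]["order_weight"] for c in customers]
    buyers = rng.choices(customers, weights=weights, k=n_orders)
    orders = []
    for oid, cust in enumerate(buyers, start=1):
        lo, hi = PLANS[cust["plan_type"]]["amount"]
        # At least one day after signup, never past ORDER_END.
        days_after = rng.randint(1, (ORDER_END - cust["signup_date"]).days)
        orders.append({
            "order_id": oid,
            "customer_id": cust["customer_id"],
            "order_date": cust["signup_date"] + timedelta(days=days_after),
            "order_amount": round(rng.uniform(lo, hi), 2),
            "product_category": rng.choice(CATEGORIES),
        })
    return customers, orders


def write_csv(rows, path):
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0]))
        writer.writeheader()
        writer.writerows(rows)  # date values are written in ISO format via str()


if __name__ == "__main__":
    customers, orders = generate_star_schema()
    write_csv(customers, "customers.csv")
    write_csv(orders, "orders.csv")
```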

Pro Tips for Better Synthetic Data

Specify distributions, not just ranges

Don’t just say: "ages between 18-65"

Say: "ages between 18-65, most between 25-45 (normal distribution)"
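To see why "normal distribution" matters, here's a small Python sketch. It's my own illustration, with mean 35 and standard deviation 7 as assumed parameters. It draws ages from a normal curve and redraws anything outside 18-65:

```python
import random


def sample_ages(n, low=18, high=65, mean=35, sd=7, seed=0):
    """Integer ages from a normal curve, truncated to [low, high].

    Out-of-range draws are rejected and redrawn, so nothing piles up at the
    edges the way it would with simple clipping.
    """
    rng = random.Random(seed)
    ages = []
    while len(ages) < n:
        age = round(rng.gauss(mean, sd))
        if low <= age <= high:
            ages.append(age)
    return ages


ages = sample_ages(10_000)
core = sum(25 <= a <= 45 for a in ages) / len(ages)
print(f"{core:.0%} of ages fall in 25-45")  # a uniform 18-65 draw would give about 44%
```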

Add correlations

Don’t just say: "revenue and customer_count columns"

Say: "revenue should correlate with customer_count (more customers = more revenue, but not perfectly linear)"

Include realistic edge cases

Don’t just say: "product_name column"

Say: "product_name column, 2% should be null, 1% should have special characters that might break things"
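Here's one way to inject those edge cases at exact rates, as a Python sketch. The `TRICKY_NAMES` list is a hypothetical sample of strings that commonly trip up CSV parsers, loaders, and string handling: embedded quotes and commas, tabs, newlines, padding, non-ASCII characters, and an injection-style string. Swap in whatever breaks your stack.

```python
import random

# Hypothetical strings that often break CSV parsing, loaders, or string handling.
TRICKY_NAMES = [
    'Widget "Pro", 2-pack',              # embedded quotes and a comma
    "O'Brien's Café Blend",              # apostrophes and non-ASCII
    "Tab\tSeparated",                    # tab character
    "Line\nBreak",                       # embedded newline
    "  padded  ",                        # leading/trailing whitespace
    "Robert'); DROP TABLE products;--",  # injection-style text
    "🚀 Rocket Mug",                     # emoji (4-byte UTF-8)
]


def product_names(n, null_rate=0.02, tricky_rate=0.01, seed=5):
    """Plain names, with exact shares replaced by None or a tricky string."""
    rng = random.Random(seed)
    names = [f"Product {i}" for i in range(n)]
    n_null, n_tricky = round(n * null_rate), round(n * tricky_rate)
    picked = rng.sample(range(n), n_null + n_tricky)  # disjoint rows for each issue
    for j in picked[:n_null]:
        names[j] = None
    for j in picked[n_null:]:
        names[j] = rng.choice(TRICKY_NAMES)
    return names


names = product_names(1000)
print(sum(x is None for x in names), "nulls,", sum(x in TRICKY_NAMES for x in names), "tricky")
# 20 nulls, 10 tricky
```

Python's `csv` module quotes fields containing commas, quotes, or newlines by default. The real test is whether every downstream step handles them too.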

Request specific data quality issues

Say: "Include 5% duplicates on order_id, 3% null customer_ids, 2% negative amounts (data errors)"

Ask for data validation

Say: "After generating, verify that all order_dates come after signup_dates and flag any violations"
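You can also run that check yourself instead of taking the model's word for it. Here's a minimal Python sketch. It assumes both tables are loaded as lists of dicts with `datetime.date` values, so parse CSV strings with `date.fromisoformat` first.

```python
from datetime import date


def find_order_date_violations(customers, orders):
    """Return (order_id, reason) for orders with an unknown customer or a too-early date."""
    signup = {c["customer_id"]: c["signup_date"] for c in customers}
    violations = []
    for o in orders:
        s = signup.get(o["customer_id"])
        if s is None:
            violations.append((o["order_id"], "unknown customer_id"))
        elif o["order_date"] <= s:  # must come strictly after signup
            violations.append((o["order_id"], "order_date not after signup_date"))
    return violations


customers = [{"customer_id": 1, "signup_date": date(2024, 3, 1)}]
orders = [
    {"order_id": 10, "customer_id": 1, "order_date": date(2024, 3, 5)},
    {"order_id": 11, "customer_id": 1, "order_date": date(2024, 2, 20)},
    {"order_id": 12, "customer_id": 99, "order_date": date(2024, 4, 1)},
]
print(find_order_date_violations(customers, orders))
# [(11, 'order_date not after signup_date'), (12, 'unknown customer_id')]
```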

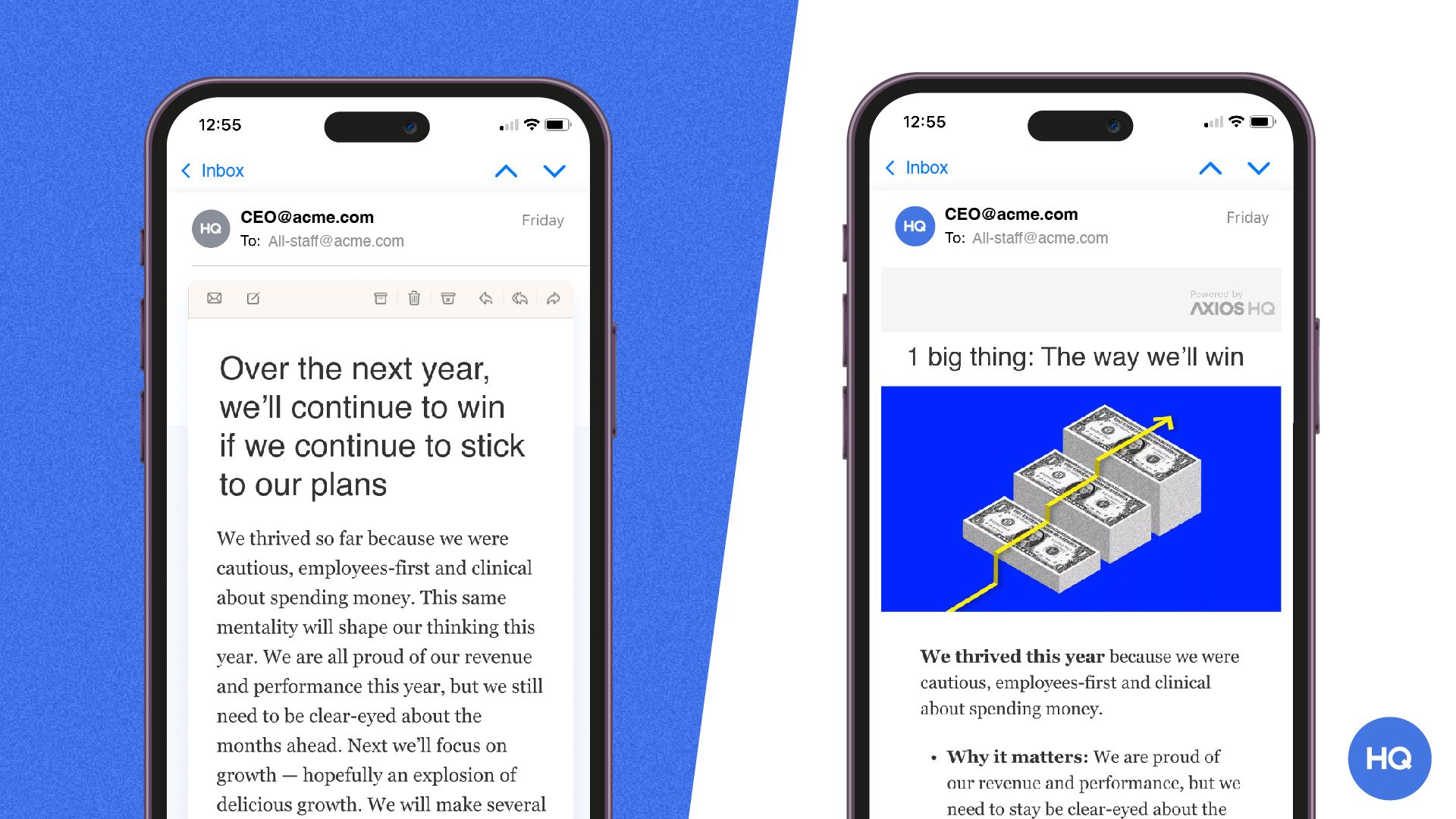

Attention is scarce. Learn how to earn it.

Every leader faces the same challenge: getting people to actually absorb what you're saying - in a world of overflowing inboxes, half-read Slacks, and meetings about meetings.

Smart Brevity is the methodology Axios HQ built to solve this. It's a system for communicating with clarity, respect, and precision — whether you're writing to your board, your team, or your entire organization.

Join our free 60-minute Open House to learn how it works and see it in action.

Runs monthly - grab a spot that works for you.

Common Use Cases

Here's when I reach for AI-generated test data:

Testing dbt models before deploying to production

Unit testing data quality checks

Training new team members without production access

Demos and presentations when you can't show real data

Load testing pipelines (generate 100K+ rows)

Testing edge cases that are rare in production

Prototyping dashboards before data exists

The 2-Minute Challenge

Right now, think of one thing you are working on this week that needs test data. Open ChatGPT (make sure Code Interpreter/Advanced Data Analysis is enabled). Write a prompt describing the data you need. Be specific:

What columns?

What distributions?

What correlations?

What edge cases?

Generate it. Download it. Use it.

Time yourself. I bet it's faster than whatever you were going to do.

What About Privacy/Compliance?

Q: Is this data truly synthetic? A: Yes, as long as you don't paste or upload real records into the prompt. ChatGPT generates random data based on the patterns you describe, so no real user data is involved.

Q: Can I use this for production? A: No. This is test data only. Don't train models on it or use it for actual analysis.

Q: What if I need more control? A: For complex synthetic data generation (preserving statistical properties of real data), look at dedicated tools like Gretel.ai (acquired by NVIDIA).

Your Turn

Try this once this week. Just once.

The next time you think "I need test data," spend 2 minutes with AI instead of 30 minutes copying production data or manually creating rows. Then hit reply and tell me:

What did you generate?

Did it actually work for your use case?

What surprised you?

I want to know if this actually saves you time or if I'm overselling it.

P.S. - If you want to get fancy, you can ask ChatGPT to generate the synthetic data AND write the Python script that created it. Then you can version control your test data generation and regenerate it anytime. But that's a topic for another newsletter...